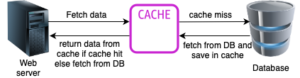

A cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs.

When a system writes data to cache, it must at some point write that data to the backing store as well. The timing of this write is controlled by write policy. Here are the basic writing approaches:

- Write-through

- In a write-through cache policy, every write operation (to the main memory or storage) is immediately mirrored in the cache. This ensures that the cache always contains an up-to-date copy of the data in the main memory/storage.

- Pros: Data consistency is guaranteed, and reads always return the most recent data. It simplifies cache management.

- Cons: Write operations can be slower since they require both a write to the cache and a write to the main memory/storage. This policy may not fully exploit cache benefits in terms of read speed.

- Write back

- In this policy, writes are initially made to the cache but not immediately propagated to the main memory or storage. They are only written to the main memory/storage when the cache is full or when a specific condition is met.

- Pros: Write operations are faster since they are initially performed only in the cache. It can help smooth out bursts of write traffic.

- Cons: Data consistency can be a challenge if the system crashes before cached writes are flushed to the main memory/storage. Care must be taken to ensure data integrity.

- Write-Around

- Write-around caching involves bypassing the cache for write operations. Data is written directly to the main memory or storage and is only cached on subsequent read operations.

- Pros: It prevents cache pollution with infrequently accessed data, ensuring that the cache remains focused on frequently read data.

- Cons: Initially, read operations on recently written data might be slower as it’s not in the cache.

Cache Invalidation

Cache invalidation refers to the process of marking cached data as invalid or stale, indicating that it should no longer be used because the data in the main memory/storage or the source of truth has changed. When new data is written to the main memory or storage, it may render the corresponding cached data obsolete. Cache invalidation ensures that the cache remains consistent with the source of truth.

There are several cache invalidation strategies:

- Time-based Invalidation: Cached data is marked as invalid after a certain period of time has elapsed (time-to-live). This approach is often used for data that has an expiration date, such as web pages or session data.

- Event-based Invalidation: Cached data is invalidated when a specific event or change occurs in the data source. For example, when a database record is updated, the corresponding cache entry is invalidated.

- Manual Invalidation: Developers explicitly mark cached data as invalid when they know that it has changed in the source. This provides fine-grained control over cache invalidation but requires careful management.

Cache Eviction

Cache eviction refers to the process of selecting and removing data from the cache to make space for new or more relevant data. When a cache reaches its capacity limit and needs to store new items, eviction policies determine which items should be removed.

Common cache eviction policies include:

- Least Recently Used (LRU): This policy removes the least recently accessed item from the cache. It assumes that the least recently used data is the least likely to be needed in the near future.

- Most Recently Used (MRU): This policy removes the most recently accessed item from the cache. It assumes that the most recently used data is less likely to be needed again immediately.

- Least Frequently Used (LFU): This policy removes the item with the least number of accesses. It aims to retain items that are accessed frequently.

- Random Replacement (RR): This policy selects a random item from the cache for eviction. While simple, it may not be the most efficient choice for all scenarios.

- First-In-First-Out (FIFO): This policy removes the oldest item added to the cache. It doesn’t consider how frequently items are used.

- Size-Based Eviction: In this approach, the cache is evicted based on the size of the items. When the cache reaches a certain size limit, the least essential items (often the largest ones) are removed.

The choice of cache invalidation and eviction policies depends on the specific use case and requirements. For example, a web page cache might use time-based invalidation and LRU eviction, while a database cache might rely on event-based invalidation and LFU eviction. The goal is to balance data consistency, cache hit rates, and resource utilisation effectively.