Problem Statement: Design a Machine Learning system to recommend nearby restaurants that the user may be interested in visiting (a Yelp like app for restaurants).

Clarifying Questions

The question asked here is very broad and ambiguous. Let’s ask a few clarifying questions to better understand the problem and the interviewer’s expectations. Some of the questions that would be helpful here are:

- Is this for a mobile app?

- This will help us understand if we can pre-generate recommendations. If the user’s location changes often (as in case of a mobile app), we may need to generate recommendations online.

- Is there an existing system in place?

- If there is an existing system in place, then we have a baseline to compare our new ML system.

- How many restaurants are there?

- This would help us understand the scale of our system.

- Is this app supposed to work globally?

- This would enable us to think of scale on a global level as well as dealing with differences in language and behaviour with respect to reviews, descriptions, etc.

- How many people are going to use the app?

- This is again related to scale.

- Do we have a database for restaurants?

- This is a very important information. If we don’t have a database of restaurants, we need to think about how to build it. In ML design interviews, the interviewer will most likely say that this database is available.

- Do we have any logged data for users?

- Another very important information needed to build our ML system. Again, most probably the interviewer will tell you that you have some logged user data available to be used. If not, you could suggest using the existing system (if there is one in use) to log user data for a month. If the system doesn’t exist, we could build a naive system based on some simple heuristic like showing restaurants based on their distance, with the closest one shown at the top.

Business objective

Thinking of business objective shows seniority, hands-on experience in working on real-world problems and sets you apart from other candidates.

- Create/improve the app such that we have a higher user engagement or user satisfaction (It could also be things like increase number of customers, ad revenue – if you have advertised listings, etc.).

- User engagement could be a combination of time spent, likes (if available), reviews, etc.

- User satisfaction could be measured by reviews or surveys.

Requirements

- Training

- User preferences may change regularly and new restaurants may become popular. So we need to train our model regularly. We could start with training weekly and change it to daily if the improvements in metrics justify the extra infrastructure costs.

- Inference

- For restaurant recommendations, it’s important to find the balance between exploration vs. exploitation. If the model over-exploits historical data, new restaurants might not get exposed to users. We want to balance between relevancy and new restaurants.

- For every user to visit the homepage, the system will have to recommend 100 restaurants for them (This number could vary depending on the location). The latency needs to be under 200ms, ideally sub 100ms.

Give an overview of the various components to discuss at this point: Training Data, Feature Engineering, Modelling, Evaluation, Deployment and Monitoring. Then proceed talking about each component. This shows organisation and structure to your approach, more typical of senior candidates.

Training data

- How to collect training data?

- Training data would consist of information about the user, restaurant and historical interactions. We could set-up new user sign-up and new restaurant sign-up options in the app itself. Any user who wishes to use the app needs to sign-in. Similarly, new restaurants could be added by their owner via the app (we could have a verification in place for adding new restaurants to avoid false data and misuse of the app).

- Most likely, the interviewer would have told you that there is an existing database of restaurants and users, which could be used as a starting point. Nevertheless, we could explain this approach for new users/restaurants.

- Monitoring users’ interaction with the app would help us get valuable signals and also come up with labels which could be used in the ML task.

- We would also need user location. If the user has location service turned off, we could display a message stating that the user needs to turn on the location service to use the app.

- Labelling positive and negative classes.

- Some of the signals we could consider here are user clicks, time spent on the page, user interactions (like, review, etc.). Depending on the business objective and what’s more important here, we could decide on which one to pick.

- If we decide to go with using user clicks as a signal, then a click would signal a positive example and an impression without a click would refer to a negative example. The downside here could be that some users may click by mistake, while others may just look at the listing they are interested in, without clicking.

- If time spent is more important for our business, we could decide on a threshold or have a config that could be altered based on some experiments and then instances where the user spends more time than the threshold would be labelled positive, otherwise negative. Challenges here would be first to figure out the right threshold, as it would vary greatly based on user. Also, what would happen if a user opened a listing and got busy with something else while the listing is open.

- Using likes and reviews could be directly mapped to labels. A user like would be positive label, whereas an impression without like would be negative. A review of 4-star or higher could be considered positive whereas anything lower as negative. This approach gives some direct control to the user but the downside here could be that many users may not like or review even when they liked the recommendation.

- We could also use a hybrid approach to combine two or more of the aforementioned approaches.

- We could start with a click-based task to keep things simple and have an ML system in place before experimenting with a hybrid approach.

- Ways to combine ML tasks like clicks, ratings, etc.

- As suggested above, we could start with a click-based task. We could incrementally add like-based, ratings-based, time-based tasks to the initial set-up, by combining recommendations from a couple of tasks to see if that significantly improves the results (measured via A/B tests).

- This would add extra infrastructure costs as well as increase the recommendation generation latency. We could run the pipelines corresponding to different tasks in parallel, still there would be some extra cost involved in combining the recommendations from different sources. This cost vs performance results would need to be analysed closely.

- Feedback loops in using logged data

- Focussing on clicks to start with gives a higher volume of user feedback to train and evaluate your model.

- We need to be aware of degenerate feedback loops, which can happen when the predictions themselves influence the feedback, which, in turn, influences the next iteration of the model. For example, in the initial iteration, say restaurants A and B are very close in terms of their scores but because A was originally ranked a bit higher, it showed up higher in the recommendation list, making users click on A more, which made the system rank A even higher. After a while, A’s ranking became much higher than B’s. This can cause the model to perform sub-optimally, perpetuate and magnify biases present in the data.

- To address this problem, we could use two techniques: randomisation and using positional features.

- Mixing random items with system recommended items helps reduce homogeneity and improve diversity.

- To use positional features to combat degenerate feedback loops, we can use two different models. The first model predicts the probability that the user will see and consider a recommendation taking into account the position at which that recommendation will be shown. The second model then predicts the probability that the user will click on the item given that they saw and considered it. The second model doesn’t concern positions at all.

- Class Imbalance

- We are using clicks to classify instances, which would be rarer compared to impressions, we’ll have a lot more negative samples compared to positive samples leading to class imbalance. This could cause problems if the data is highly imbalanced and there aren’t enough signals to learn the minority class.

- If class imbalance is deemed a problem after analysing the data, we could use resampling (oversampling the minority class or undersampling the majority class), but this could have its own issues like risk of losing important data after undersampling and risk of overfitting after oversampling.

- We could also use algorithmic methods to deal with this (for details, see Training Data).

- Ensembles seem to work well when there is class imbalance.

- Cold-start problem

- We would encounter this when we have no historical data for the model to use. This would happen when a new user signs up or a new restaurant gets added.

- One way to solve this problem is using representative approach. For a new user, we could show some contents on sign-up and deduce interest based on clicks or explicitly ask the user for some feedback (like or dislike). Similarly, for restaurants we could find similar restaurants and use that or boost newly added restaurants in recommendations.

Feature Engineering

- Features:

- User only features

- gender, age, location, demographics, metadata, user_interests, user_historical_visits

- Place only features

- location, popularity, cuisine, health_ratings, expense_category, is_fine_dining, reviews, ratings, number_of_ratings, clicks, clicks_in_last_24_hours, clicks_in_last_7_days, open_since, impressions, impressions_in_last_24_hours, impressions_in_last_7_days

- User-place interaction features

- user_place_historical_interactions_last_30_days, user_place_historical_interactions_last_6_months, user_place_historical_interactions_last_1_year, distance

- Context features

- time_of_the_day, day_of_the_week, season_of_the_year, is_holiday_season, month, upcoming_holidays, days_to_upcoming_holiday

- User only features

- Feature types and featurisation techniques:

- Numeric features

- For numeric features like clicks, number_of_ratings, days_to_upcoming_holiday, etc., first we would want to look at missing values and handle them.

- If a feature has too many missing values, we could consider removing the column. However, this might remove some important information and reduce the accuracy of the model.

- Also, if a sample has missing values, we could remove that row. This method can work when the missing values are completely at random and the number of examples with missing values is small.

- Even though deletion is tempting because it’s easy to do, deleting data can lead to losing important information and introduce biases into the model. We could fill missing values with default value or using mean, median or mode.

- It’s important to scale the features before inputting it into a model. We could do min-max scaling: x’ = (x – min(x)) / (max(x) – min(x)), which would output the values in the range: [0, 1]. Standardisation is another option which would make the values have zero mean and unit variance: x’ = (x – mean(x)) / std(x).

- Categorical features

- For categorical features like cuisine, month, season_of_the_year, ratings, etc., we could use One Hot Encoding (explained in this lesson). However, this would lead to complex computation and high memory usage for categories with high cardinality, if we are building a large scale system. Also, new categories may appear in production, and marking all of them into one category (others) may not be optimal.

- We could use the hashing trick to encode categorical features, by using a hash function to generate a hashed value of each category. The hashed value will become the index of that category. Because we can specify the hash space, we can fix the number of encoded values for a feature in advance, without having to know how many categories there will be, enabling new categories to appear in production without any issues.

- Collision is a problem with hash functions, especially in cases of small hash size. We can increase the hash size, but that will consume more memory. With hash function, the collisions are random and the impact of collided features isn’t bad in most cases.

- Embeddings

- We can generate embeddings for user and place features.

- We could pass user features to a trainable Neural Network (encoder), which can be a part of Two-tower network described later under Candidate Generation Service. We could do the same for place features.

- We can generate text embeddings for textual features like metadata, reviews, etc. using pre-trained models like word2vec, GloVe, etc. (We could also briefly mention bag of words and TF-IDF vectoriser, listings its disadvantages).

- Numeric features

Modelling

- There would be a lot of restaurants in the database and ranking all of them would be too slow and not relevant to the user as many of them wouldn’t be in the user’s location. Hence, we break the modelling part into three services: candidate generation, ranking and re-ranking.

- Candidate generation service

- The candidate generation service will find the relevant restaurants based on user location, user and place features and user’s historical interactions with restaurant listings.

- We can fetch a list of nearby restaurants based on user’s location. We could use a quad-tree structure to store the restaurants to make this retrieval efficient. We could select restaurants in a 20 KM radius. If there are more than 1000 restaurants within 20 KM, we can select the nearest 1000 restaurants.

- Two-tower Neural Network

- We could use user and place embeddings (that we get using encoders) and use the dot product to calculate a similarity score.

- The encoders are trained to produce embeddings such that the dot product of a user, place pair that actually interacted is high and the dot product of a non-interacting pair is close to 0.

- To get positive examples, i.e. examples of user and item pairs the network should learn to recommend, we can look at what a user has clicked in the past.

- We could sample from the list of restaurants that the user has been presented by the app but not clicked on by the user (negative impressions).

- Ranking service

- The ranking model will optimise for the click likelihood, i.e., restaurants that have a high click probability should be ranked higher.

- We could start with a simple logistic regression model. It would directly give you the probability score. It’s not very resource intensive, doesn’t need a lot of training data to get started. On the flip side, if the data is not linearly separable, this won’t work well.

- Fully-connected Neural Network is a simple but powerful algorithm which captures non-linear relationships. We can use sigmoid activation at the last layer to get probability scores. Log-loss could be used as the loss function. They are computationally expensive and need more data.

- Re-ranking service

- This service adds additional constraints for the final ranking like removing restaurants that may be closed at the time, etc. Re-ranking can also help ensure diversity, freshness and fairness.

- One approach is to use filters that remove some listings.

- Another approach is to manually transform the scores returned by the ranker.

Evaluation and Deployment

- Evaluation metrics

- Offline Metrics:

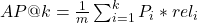

- MAP@k (Mean Average Precision)

- It gives insight into how relevant the list of recommended items are.

, where

, where  if

if  item is relevant else

item is relevant else  ,

,  = precision for top i recommendations, m = total number of relevant recommendations.

= precision for top i recommendations, m = total number of relevant recommendations.- MAP@k = mean of AP@k

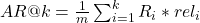

- MAR@k (Mean Average Recall)

- It gives insight into how well the recommender is able to recall all the items the user has rated positively.

, where

, where  if

if  item is relevant else

item is relevant else  ,

,  = recall for top i recommendations, m = total number of relevant recommendations.

= recall for top i recommendations, m = total number of relevant recommendations.- MAR@k = mean of AR@k

- MAP@k (Mean Average Precision)

- Online Metrics:

- Click-through rate (CTR), Time Spent, User Engagement

- These could be directly tied back to the business objective.

- Offline Metrics:

- Setup train, test, validate partitions (to prevent overfitting)

- We could split the 30-day training data we have into training, validation and test set. Sequence is important here, so we could use the first 20 days of data for training, next 5 days for validation and the last 5 days for testing.

- If we decided to resample the training data to handle class imbalance, we should not evaluate our model on resampled data, since it will cause the model to overfit to that resampled distribution.

- A/B test setup

- We could use the existing model as control group and the new model as test group.

- In a big company, many people may be running A/B experiments with their candidate models. It’s important to make sure your results are not getting affected by other experiments. It’s good to create multiple universes consisting of external users where all experiments corresponding to a particular feature/project are run in the same universe. Inside a universe, an external user can only be assigned to one experiment, thus making sure multiple experiments from the same universe won’t influence each other’s results.

- After doing sanity check for any config errors, we route half the traffic to control group and wait for a week before analysing the results.

- We could use chi-squared test or two-sample test to see if the results are statistically significant and decide whether the new model improves on the previous model.

- Debugging offline/online metric movement inconsistencies

- It’s important to continuously monitor the ML system and have health checks in place and alerts enabled.

- We should ideally have dashboards showing the important metrics (both business and performance) and changes in these metrics over time.

- If these metrics go below a certain threshold, we should have an alerting system in place to notify the correct team.

- We should also have logging in place, which would help with debugging these issues.

- A common cause of drop in model performance is data distribution shift, when the distribution of data the model runs inference on changes compared to the distribution of data the model was trained on, leading to less accurate models.

- We could work around the problem of data distribution shift either by training the model on a massive training dataset with the intent that the model learns a comprehensive distribution, or by retraining the model (from scratch or fine-tuning) to align with the new distribution.

- Edge cases can also make the model make catastrophic mistakes and need to be kept in mind when debugging.