Problem Statement: Design a chat service like WhatsApp, Messenger, Telegram, Discord, etc.

Clarifying Questions

- How many users do we expect?

- WhatsApp currently has around 2.7 billion monthly users. We can assume 1 billion daily users.

- Are we building the product from scratch?

- Yes.

- Do we only support 1:1 messaging or do we also have group chats?

- Let’s start with 1:1 messaging only.

- Is this a mobile app or web app?

- Mobile app.

- Do we support just text, or do we also support sending images, videos, etc.?

- Just text.

- Do we support audio/video calls?

- No.

- Is there any limit on the size of message?

- 50,000 characters.

- Do we need to save the entire conversation history?

- Yes.

- Do we need end-to-end encryption?

- No.

- Do we want a double tick to show if the message was delivered and then blue tick if the message was read?

- No.

- Can the user login at multiple places at a time?

- No.

- Do we support multiple languages?

- Let’s start with just English.

- Do we need to think about user signup, authentication, push notification, etc.?

- Let’s assume that these services are there.

- Do we support searching the chat history?

- No

Requirements

- Functional requirements

- It should support 1:1 chat between users.

- It should display the entire conversation history, when required.

- It should display the user status: online/offline.

- Non-functional requirements

- Real-time chat experience with minimal latency.

- System should be highly available.

Back-of-the-envelope estimation

- Traffic

- Daily Active Users (DAU) = 1 billion

- Let’s assume that people send on average 50 messages daily, so total messages sent in a day = 50 billion

- Storage

- Let’s assume that on average, messages are 100 bytes in size.

- Total Storage for 1 day = 50 billion x 100 bytes = 5 TB

- As, we need to store all the conversation history, total storage for 10 years is approximately = 5 TB x 365 X 10 = 18.25 PB

- Here, we only calculated the size of messages. On top of this, we would also need to save the user id, timestamp, location, etc.

- Bandwidth

- Our service processes 5 TB of data everyday. This is the data that is sent from users to the messaging service and then back to users.

- So, we need same amount of upload and download bandwidth of 5 TB / (24 x 60 x 60) s = 57.87 MB/s

System APIs

send_message(auth_token, user_id, receiver_id, message, timestamp)

- auth_token: Used for authenticating API requests

- user_id: current user id

- receiver_id: user id of the receiver

- message: text content

- timestamp: time at which the message was sent

- returns a bool indicating success or failure of the operation

fetch_messages(auth_token, user_id, timestamp)

- auth_token: Used for authenticating API requests

- user_id: current user id

- timestamp: time at which last fetch_messages request was made

- returns a list of all messages sent to this user after timestamp that was passed as a parameter. Also, returns the new timestamp, which would be used in the next call.

Overall System

- A client connects to the chat server by opening an HTTP connection, when it needs to send a message.

- The keep-alive is efficient for this because the keep-alive header allows a client to maintain a persistent connection with the chat service. It also reduces the number of TCP handshakes.

- The server sends an acknowledgement back to the client, sends the message to the database server for storage and to the receiver client.

- However, this server to receiver communication is not straight-forward, since HTTP is client-initiated.

Let’s go into the details of how can servers deliver the messages to receiver clients.

- Polling (Pull model)

- The client periodically connects to the server to check for new messages. The server needs to keep track of the messages that need to be delivered.

- As soon as the client connects to the server, the server will deliver all the pending messages.

- If the client takes longer to connect to the server, this could cause increased latency.

- To minimise latency, the client would try to connect to the server frequently, but this would be inefficient and costly in terms of resources as a lot of times, there won’t be any new messages.

- Long Polling (Push model)

- In long polling, a client holds the connection open until there are new messages available or a timeout threshold has been reached.

- Once the client receives new messages, it immediately sends another request to the server, restarting the process.

- Sender and receiver may not connect to the same chat server. HTTP based servers are usually stateless. If you use round robin for load balancing, the server that receives the message might not have a long-polling connection with the client who receives the message.

- Also, a server has no good way to tell if a client has disconnected.

- For users that don’t chat much, making periodic connections after timeouts is still inefficient.

- WebSocket (Push model)

-

- WebSocket provides full-duplex communication channels over a single TCP connection.

- It provides a persistent connection between a client and a server that can be used for sending data at any time.

- The client establishes a WebSocket connection through a process known as the WebSocket handshake. If the process succeeds, then the server and client can exchange data in both directions at any time.

- The WebSocket protocol enables asynchronous communication between a client and a server with lower overheads, facilitating real-time data transfer from and to the server.

High-level Design

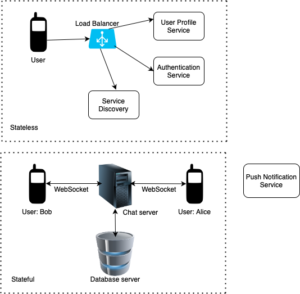

From our discussion in the previous section, we saw that WebSocket would probably be our main communication protocol between the client and server. Other features like sign up, login, user profile, etc of a chat application could use the traditional request/response method over HTTP.

Stateless Services

- Stateless services are traditional public-facing request/response services, used to manage the login, signup, user profile, etc.

- Stateless services sit behind a load balancer whose job is to route requests to the correct services based on the request paths.

- Service discovery is an important service which gives the client a list of DNS host names of chat servers that the client could connect to.

Stateful Service

- The chat service is stateful because each client maintains a persistent network connection to a chat server.

- In this service, a client normally does not switch to another chat server as long as the server is still available. The service discovery coordinates closely with the chat service to avoid server overloading.

Push Notification

-

It is a way to inform users when new messages have arrived, even when the app is not running, making it crucial.

- Status servers manage online/offline status.

Database

- In our chat system, we have user profile data, which can be stored in robust and reliable relational databases. Replication and sharding can be used to satisfy availability and scalability requirements.

- We also have chat history, which is enormous (as we saw in back-of-the-envelope calculation above).

- Most users tend to check recent conversation, and rarely look at very old messages.

- Users do at times search their messages for some content (although we are not supporting it currently), which also involves jumping directly to a particular message and neighbouring conversation.

- Read to write ratio usually is 1:1.

- We need to have a database that can support a very high rate of small updates, and fetch a range of records quickly. This is required because we have a huge number of small messages that need to be inserted in the database and while querying, a user is mostly interested in accessing the messages in a sequential manner.

- We cannot use RDBMS like MySQL because we cannot afford to read/write a row from the database every time a user receives/sends a message. When the indexes grow large, random access is expensive. This will make not only basic operations of our service to run with high latency but also create a huge load on databases.

- Key-value stores allow easy horizontal scaling and provide very low latency in accessing data.

- Facebook messenger uses HBase, and Discord uses Cassandra.

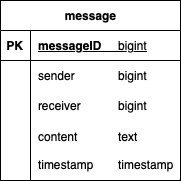

- Message table:

- We are going to use messageID to sort the messages in order as timestamp can be same for multiple messages. To guarantee the same order of messages, messageID should be unique and sortable by time (recent messages would have a higher value of messageID).

- In MySQL, we could use AUTO_INCREMENT keyword with messageID to generate a unique number automatically when a new record is inserted into a table. However, this is not present in NoSQL databases.

- We could use a unique id generator, which would generate a globally unique messageID in a distributed environment.

- We could use local sequence number generator. Local means IDs are only unique within a 1:1 chat. The reason why local IDs work is that maintaining message sequence within one-on-one channel is sufficient. This approach is easier to implement in comparison to the global ID implementation.

Deep-dive

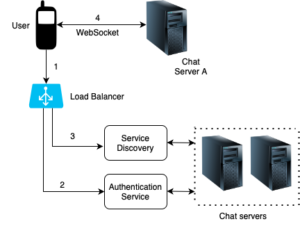

Service Discovery

Service discovery recommends the best chat server for a client based on criteria like geographical location, server capacity, etc. Apache Zookeeper is a popular open-source solution for service discovery. It registers all the available chat servers and picks the best chat server for a client based on predefined criteria.

- User A tries to log in to the app.

- The load balancer sends the login request to the authentication service.

- After the user is authenticated, service discovery finds the best chat server for the user (let’s say chat server A).

- User connects to chat server A through WebSocket.

Message flow

1. User Bob sends a chat message to Chat server A.

2. Chat server A gets the messageID from the ID generation service.

3. Chat server A sends the message to the message sync queue.

4. The message is stored in the database (key-value store).

5.a. If User Alice is online, the message is forwarded to Chat server B where User B is connected.

5.b. If User Alice is offline, push notification service sends a push notification to User Alice.

6. Chat server B forwards the message to User Alice. There is a persistent WebSocket connection between User Alice and Chat server B.

User Status

- Displaying the status of a user (whether the user is online) is essential for most of the chat applications.

- Status servers are responsible for managing user’s online status and communicating with clients through WebSocket.

- User login

- We already talked about this in service discovery. After a WebSocket connection is established between the client and the status service, user’s online status and last_active_at timestamp are saved in the database.

- Status indicator shows the user is online after login.

- User logout

- When a user logs out, the user’s online status is changed to offline in the database.

- The status indicator shows a user is offline.

- User disconnection

- When a user disconnects from the internet, the persistent connection between the client and server is lost.

- A naive way to handle user disconnection is to mark the user as offline and change the status to online when the connection re-establishes. However, this approach has a major flaw. It is common for users to disconnect and reconnect to the internet frequently in a short time. For example, network connections can be on and off while a user goes through a tunnel. Updating online status on every disconnect/reconnect would make the presence indicator change too often, resulting in poor user experience.

- We could use a heartbeat mechanism to solve this problem. Periodically, an online client sends a heartbeat event to status servers. If status servers receive a heartbeat event within a certain time, say x seconds, from the client, a user is considered as online. Otherwise, the user is offline.

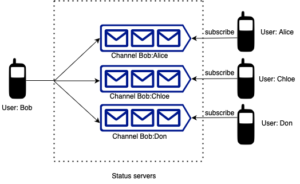

- Online status fanout

- How do a user’s friends know about the status changes?

- Status servers may use a publish-subscribe model, in which each friend pair maintains a channel. When User Bob’s online status changes, it publishes the event to three channels corresponding to his three friends: Alice, Chloe, Don. Those three channels are subscribed by these users. Thus, it is easy for friends to get online status updates. The communication between clients and servers is through real-time WebSocket.

- The above design is effective for a user with small number of friends (say under 500).

- For larger groups of friends, informing all members about online status is expensive and time consuming. Assume a person has 100,000 friends (An extreme scenario in general, but if we allow groups in future, this may happen). Each status change will generate 100,000 events. To solve the performance bottleneck, a possible solution is to fetch online status only when a user manually refreshes the friend list.

Data Sharding and Replication

- We need to distribute the chat data onto multiple database servers as we are storing a lot of data.

- Sharding based on messageID

- If we shard based on messageID, different messages of a user would be stored on separate database shards and fetching a range of messages of a chat would be inefficient.

- Sharding based on UserID

- Let’s assume we partition based on the hash of the UserID, so that we can keep all messages of a user on the same database. If one DB shard is 4 TB, we will have 18.25 PB / 4 TB ~= 4560 shards for ten years. For simplicity, let’s assume we keep 5000 shards. So we will find the shard number by “hash(UserID) % 5000”, and then store/retrieve the data from there. This partitioning scheme will also be very quick to fetch chat history for any user.

- In the beginning, we can start with fewer database servers with multiple shards residing on one physical server. Since we can have multiple database instances on a server, we can easily store multiple shards on a single server. Our hash function needs to understand this logical partitioning scheme so that it can map multiple logical partitions on one physical server.

- Since we will store an infinite history of messages, we can start with a big number of logical partitions, which would be mapped to fewer physical servers, and as our storage demand increases, we can add more physical servers to distribute our logical partitions.

Caching

- Caching chat messages and some user data on the client-side can significantly enhance user experience. It allows users to access recent messages even when offline or with a slow network connection.

Monitoring and Error Handling

- Chat server error: There might be hundreds of thousands, or even more persistent connections to a chat server. If a chat server goes offline, service discovery can provide a new chat server for clients to establish new connections.

- Message resent mechanism: Retry and queueing are common techniques for resending messages.

Security

- Having end-to-end encryption would be good in our chat service. WhatsApp supports end-to-end encryption, where only the sender and the receiver can read the messages.

- For more details on WhatsApp’s end-to-end encryption, please refer to the WhatsApp Security Whitepaper.